How do we know that a scientific analysis is valid? When a pharmaceutical study says that a new drug is effective, how can we be sure? Scientists use p (the "p-value"), a measure of statistical significance: roughly, the probability of getting results at least as striking as the ones observed if nothing but chance were at work. The lower the p-value, the more confident the scientist is that the results are not random, but reflect the hypothesis under test. Generally speaking, to have a valid result, the p-value has to be equal to or less than 0.05; this is sometimes known as "95% confidence". We see it all the time in scientific papers, and indeed, you're very unlikely to see any paper get published where p > 0.05.
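To make "the lower the p-value" concrete, here is a minimal sketch of one common way to compute a p-value: a two-sample permutation test on the difference in means (one of the permutation tests Briggs mentions further down). The function name, its parameters, and the drug and placebo scores are made up for illustration. They are not from any real study.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in group means.

    If the labels "drug" and "placebo" don't matter (the null hypothesis),
    shuffling them shouldn't change the difference much. The p-value is the
    fraction of shuffles producing a difference at least as large as the one
    actually observed.
    """
    rng = random.Random(seed)

    def mean(xs):
        return sum(xs) / len(xs)

    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign the labels
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Counting the observed labeling itself keeps the estimate above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Made-up scores, for illustration only.
drug = [10, 11, 12, 13, 14, 15]
placebo = [0, 1, 2, 3, 4, 5]
print(permutation_p_value(drug, placebo))
```

With these numbers the estimate comes out around 0.002, well under 0.05: out of the 924 ways to split twelve scores into two groups of six, only the original labeling and its mirror image separate the groups that cleanly.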

The implication is that scientists have a strong motivation to try different analysis techniques on their data, looking for one that will give a p < 0.05. Michael Mann and his colleagues in the climate science community were busted for statistical shenanigans regarding the "Hockey Stick" graph, made famous in Al Gore's film An Inconvenient Truth. You don't hear about the Hockey Stick any more (except as a term of derision, a punch line) because Steve McIntyre and Ross McKitrick showed pretty conclusively that Mann's analysis resulted in p > 0.05. Oops.
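Why shopping among analyses pays off can be seen with simple arithmetic. This is a sketch under a deliberately simplified assumption: there is no real effect at all, and each of the k analyses tried is independent of the others. Each one then has a 5% chance of coming in at p ≤ 0.05 by luck alone, so the chance that at least one of them "works" is:

```latex
P(\text{at least one } p \le 0.05) = 1 - (1 - 0.05)^{k},
\qquad
k = 20:\quad 1 - 0.95^{20} \approx 1 - 0.358 = 0.642
```

Analyses run on the same data are correlated, so in practice the inflation is smaller than this formula suggests, but it is still there.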

OK, technically, it wasn't p, it was R². That's a different statistic, but the question is the same: "is this statistically significant, in other words unlikely to have occurred by chance?" With Mann and company, the answer was "no".
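R² is the fraction of the variation in the observations that a model accounts for, so values near zero mean the model explains essentially nothing. Below is a minimal sketch of the usual definition, R² = 1 - SS_res / SS_tot. The function name is hypothetical, and this is not the code used by Mann or by McIntyre and McKitrick.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot.

    SS_res is the squared error left over after the model's predictions;
    SS_tot is the squared error of simply predicting the mean every time.
    """
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    return 1 - ss_res / ss_tot


obs = [1, 2, 3, 4]
print(r_squared(obs, [1, 2, 3, 4]))  # perfect predictions -> 1.0
print(r_squared(obs, [2.5] * 4))     # no better than the mean -> 0.0
print(r_squared(obs, [4, 3, 2, 1]))  # worse than the mean -> -3.0
```

Some software instead reports the squared correlation between observed and predicted values. The two agree for a least-squares fit with an intercept, evaluated on the data it was fit to, but they can differ when a model is checked against data it wasn't fit to.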

The interesting thing is that Mann's paper was peer-reviewed, and was published in Nature. That's pretty prestigious, or at least it used to be. How'd this happen?

It turns out that there are many ways to crunch the data. Some are beyond reproach. Some are beyond reproach only when used in a particular way. William M. Briggs (Statistician to the Stars!) shows you how the scientific study sausage is made:

This is a great introduction - you don't have to understand any math or statistics at all to follow along. If you've been following my posts about the science of climate change, or if you're concerned about how new drugs are developed by the pharmaceutical industry, this is required reading.

> But that only works if you find a small p-value. What if you don’t? Do not despair! Just because you didn’t find one with regression, does not mean you can’t find one in another way. The beauty of classical statistics is that it was designed to produce success. You can find a small, publishable p-value in any set of data using ingenuity and elbow grease.
>
> For a start, who said we had to use linear regression? Try a non-parametric test like the Mann-Whitney or Wilcoxon, or some other permutation test. Try non-linear regression like a neural net. Try MARS or some other kind of smoothing. There are dozens of tests available.
>
> ...
>
> In short, if you do not find a publishable p-value with your set of data, then you just aren’t trying hard enough.
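Briggs's recipe is easy to check with a small simulation. The sketch below illustrates the idea under stated assumptions; it is not Briggs's own procedure. It models a hypothetical trial in which the drug does nothing (both arms drawn from a normal distribution with σ = 1) and analyzes it with a z-test that assumes σ is known, so each individual p-value is exact. Instead of swapping among different tests, as Briggs describes, it swaps among different slices of the same data: subgroups defined by a made-up yes/no covariate, plus an early and a late half. The function names and slices are hypothetical. The mechanism is the same: run several analyses and report the smallest p.

```python
import math
import random


def z_test_p(treated, control, sigma=1.0):
    """Two-sided z-test for a difference in means, assuming a KNOWN std. dev. sigma.

    With no real effect, this p-value is uniform on [0, 1], so p <= 0.05
    turns up 5% of the time by chance alone.
    """
    diff = sum(treated) / len(treated) - sum(control) / len(control)
    se = sigma * math.sqrt(1 / len(treated) + 1 / len(control))
    return math.erfc(abs(diff / se) / math.sqrt(2))


def forking_p_values(treated, control, t_flag, c_flag):
    """P-values from several hypothetical ways of slicing ONE dataset."""
    ht, hc = len(treated) // 2, len(control) // 2
    slices = [
        (treated, control),  # the pre-planned analysis comes first
        ([x for x, f in zip(treated, t_flag) if f],  # "it works in subgroup A"
         [x for x, f in zip(control, c_flag) if f]),
        ([x for x, f in zip(treated, t_flag) if not f],  # "...or in subgroup B"
         [x for x, f in zip(control, c_flag) if not f]),
        (treated[:ht], control[:hc]),  # an early "interim" look
        (treated[ht:], control[hc:]),  # the later patients only
    ]
    # Skip any slice that happens to leave an arm empty.
    return [z_test_p(t, c) for t, c in slices if t and c]


def simulate(n_datasets=5000, n_per_arm=50, alpha=0.05, seed=1):
    """Fraction of pure-noise datasets that look 'significant' two ways."""
    rng = random.Random(seed)
    planned_hits = hacked_hits = 0
    for _ in range(n_datasets):
        # The "drug" does nothing: both arms are drawn from N(0, 1).
        treated = [rng.gauss(0, 1) for _ in range(n_per_arm)]
        control = [rng.gauss(0, 1) for _ in range(n_per_arm)]
        # A hypothetical yes/no covariate that is also pure noise.
        t_flag = [rng.random() < 0.5 for _ in range(n_per_arm)]
        c_flag = [rng.random() < 0.5 for _ in range(n_per_arm)]
        p_values = forking_p_values(treated, control, t_flag, c_flag)
        planned_hits += p_values[0] <= alpha  # honest: one test, chosen in advance
        hacked_hits += min(p_values) <= alpha  # report whichever slice "worked"
    return planned_hits / n_datasets, hacked_hits / n_datasets


planned, hacked = simulate()
print(f"planned analysis: {planned:.3f}   best of several: {hacked:.3f}")
```

The planned analysis should come out near 0.05, as advertised. By construction the "best of several" rate can never be lower, since the planned analysis is one of the slices. How much higher it comes out depends on how many slices you try and how correlated they are.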

But if you'd rather watch an expert on the use and misuse of statistics, don't despair. S. Stanley Young of the National Institute of Statistical Sciences walks you through it, although you should plan on spending 45 minutes on his lecture (more, if you want to listen to the Q&A).

Everything is Dangerous: Stan Young's pizza lunch lecture from elsa youngsteadt on Vimeo.

It may be Humbug, but we're confident that it's quality Humbug (p < 0.05).

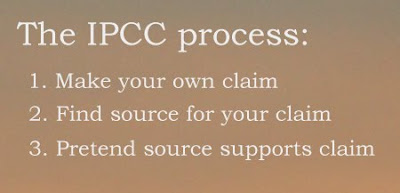

There's a point here, and it's an important one. Michael Mann refused to release his data and methods, even to a congressional inquiry. His study was publicly funded, and he still wouldn't give the government his data. Phil Jones seems to be in criminal violation of the UK Freedom of Information Act for refusing to release his data. The whole IPCC process has an odor to it.

There's a huge debate going on right now, as scientists grapple with the question of how to restore public trust in science. Here's a hypothesis for you: the public will not - and dare I say should not - trust sensational scientific results (i.e., ones where scientists stand to gain new research grants, tenure, etc.) if the data and methods are not published. That the temptation exists to make not science but scientific sausage is plausible (p < 0.05). Trust can never be claimed - only shown.

1 comment:

Public trust in science can and should only be restored when scientists are no longer idolized. Idealizing someone or some profession is fine, but anointing them with a mantle of priesthood is an abdication of intellectual responsibility. Scientists are people.

Also, not everything is possible for a layman to understand, of course, but neither is everything impossible to present in a coherent, non-jargony way.

Give me a Sagan, or a Bakker, or a Harris, or a Feynman, or a Gould - I don't agree that everything these guys say or said is correct just because they're scientists, but I can understand what they're saying.

Finally, don't dismiss simple common sense in the macro world (although it has almost no place in the quantum or cosmological worlds). If you grow up knowing and living that down is down and some guy in a lab coat with a clipboard wants to sell you some tickets to an "up is down" ride, stand your ground and ask for proof. Real proof. And keep your eyes open for cheats, charlatans, and dumbassery.
